This March I was fortunate enough to be invited to participate in a three-night event put on by Liminal as part of March Music Moderne in Portland. In addition to providing projection mapping for "Capital Capitals," I worked with Bryan Markovitz to create an installation piece based on Gertrude Stein's novel "The Making of Americans."

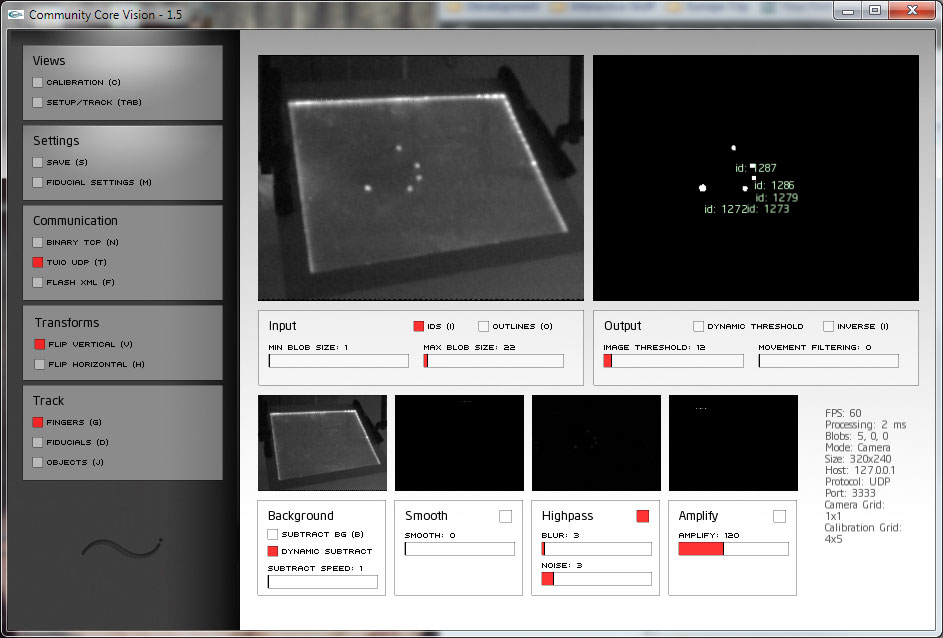

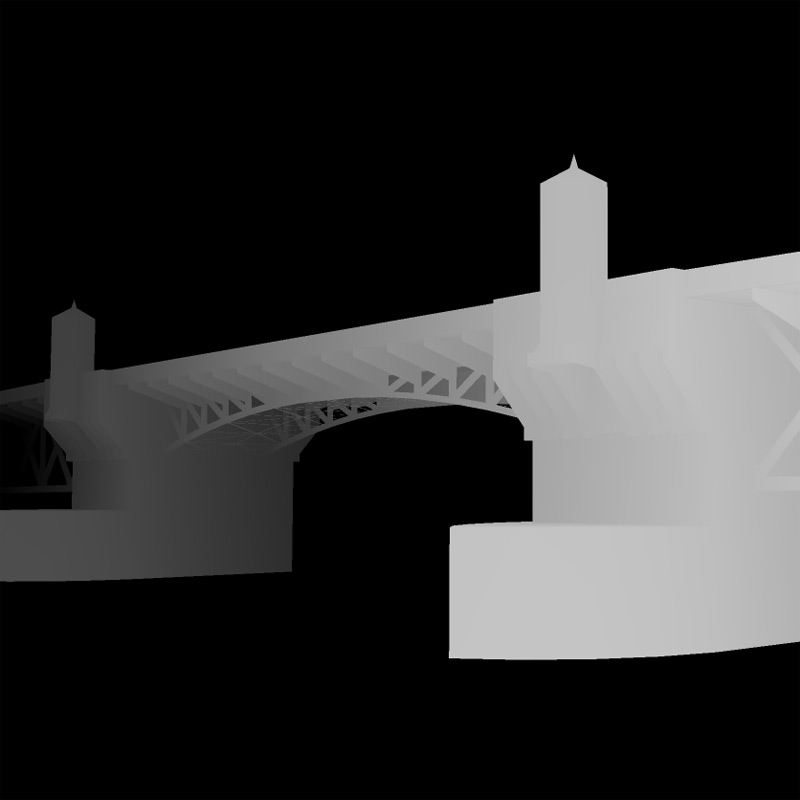

The installation included custom software I wrote to drive the projected content, which would be shown on several large pieces of art hanging on the walls of the room. This all seemed straightforward until we saw the room. It was approximately the size of a shoebox, meaning my projector would only yield an image about four feet wide. This gave us very little flexibility in placing the artwork, since all the projected content would be constrained to a very small portion of the space. I was not happy about this.
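For anyone wanting to run the same numbers on their own room, here is a rough sketch of the arithmetic. A projector's throw ratio is the throw distance divided by the image width, so a short room directly caps the image size. The throw ratio of 1.5 and the 6-foot distance below are assumed values chosen only to illustrate the four-foot result; they are not the specs of my projector or measurements of the room.

```python
def image_width(throw_distance_ft: float, throw_ratio: float) -> float:
    """Image width produced at a given throw distance.

    throw_ratio = throw distance / image width, so
    width = throw distance / throw_ratio.
    """
    if throw_ratio <= 0:
        raise ValueError("throw_ratio must be positive")
    return throw_distance_ft / throw_ratio


def required_distance(width_ft: float, throw_ratio: float) -> float:
    """Throw distance needed to reach a desired image width."""
    if throw_ratio <= 0:
        raise ValueError("throw_ratio must be positive")
    return width_ft * throw_ratio


if __name__ == "__main__":
    ASSUMED_THROW_RATIO = 1.5  # hypothetical value, not my projector's spec
    ASSUMED_DISTANCE_FT = 6.0  # hypothetical usable distance in the room

    print(image_width(ASSUMED_DISTANCE_FT, ASSUMED_THROW_RATIO))
    print(required_distance(8.0, ASSUMED_THROW_RATIO))
```

Under those assumptions, doubling the image width means doubling the throw distance, which a small room simply does not have.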

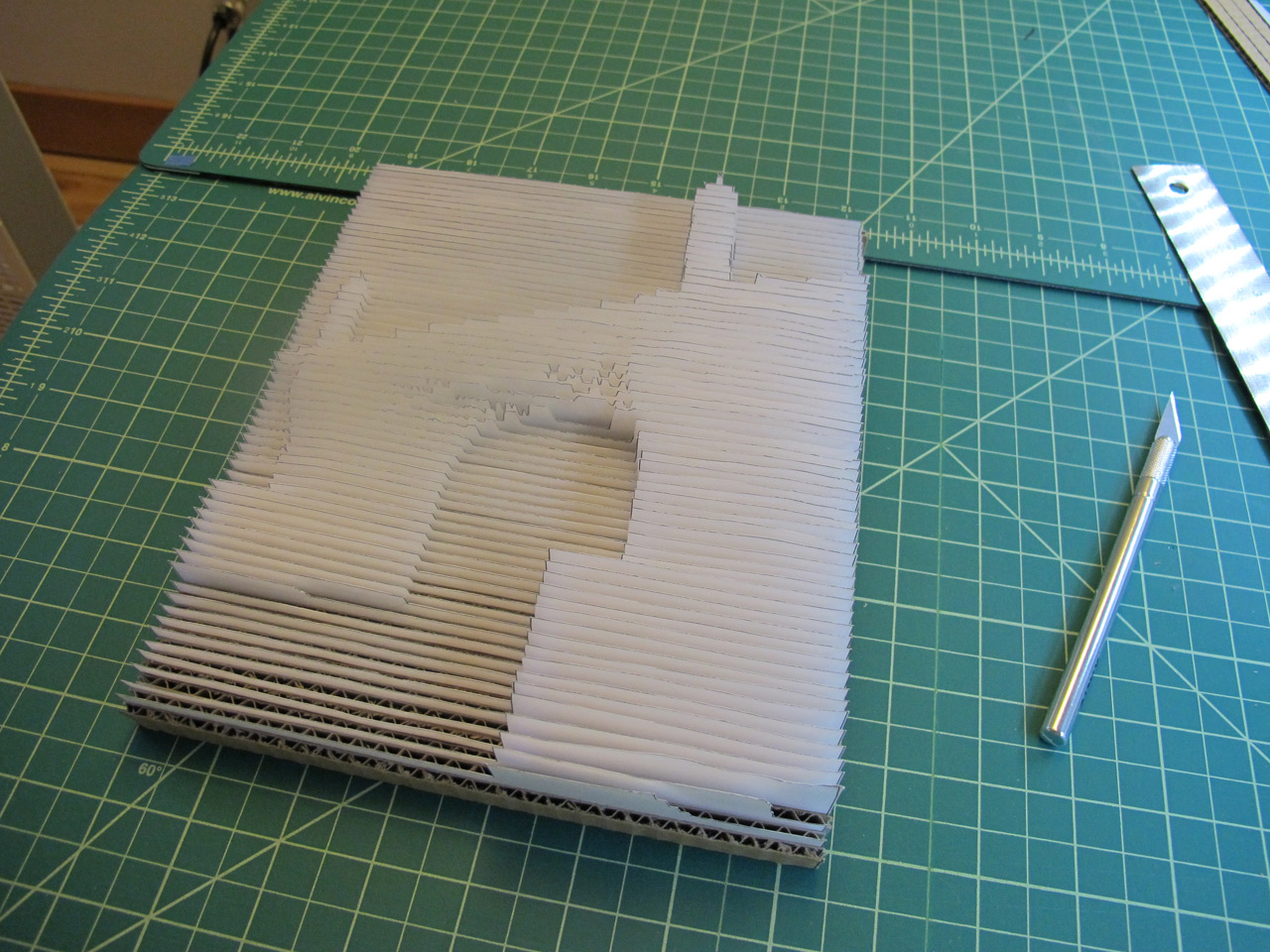

The most obvious solution I could think of was to use a single large mirror to increase the throw distance. When I tested this idea, it only marginally increased the projection size, and because space was already so limited, the physical setup was quite bulky. The idea of using a mirror stuck with me, though. If a single mirror works, why not use several small mirror panels, each one aimed at a different location in the room? Thus was born the idea of an adjustable mirror array.

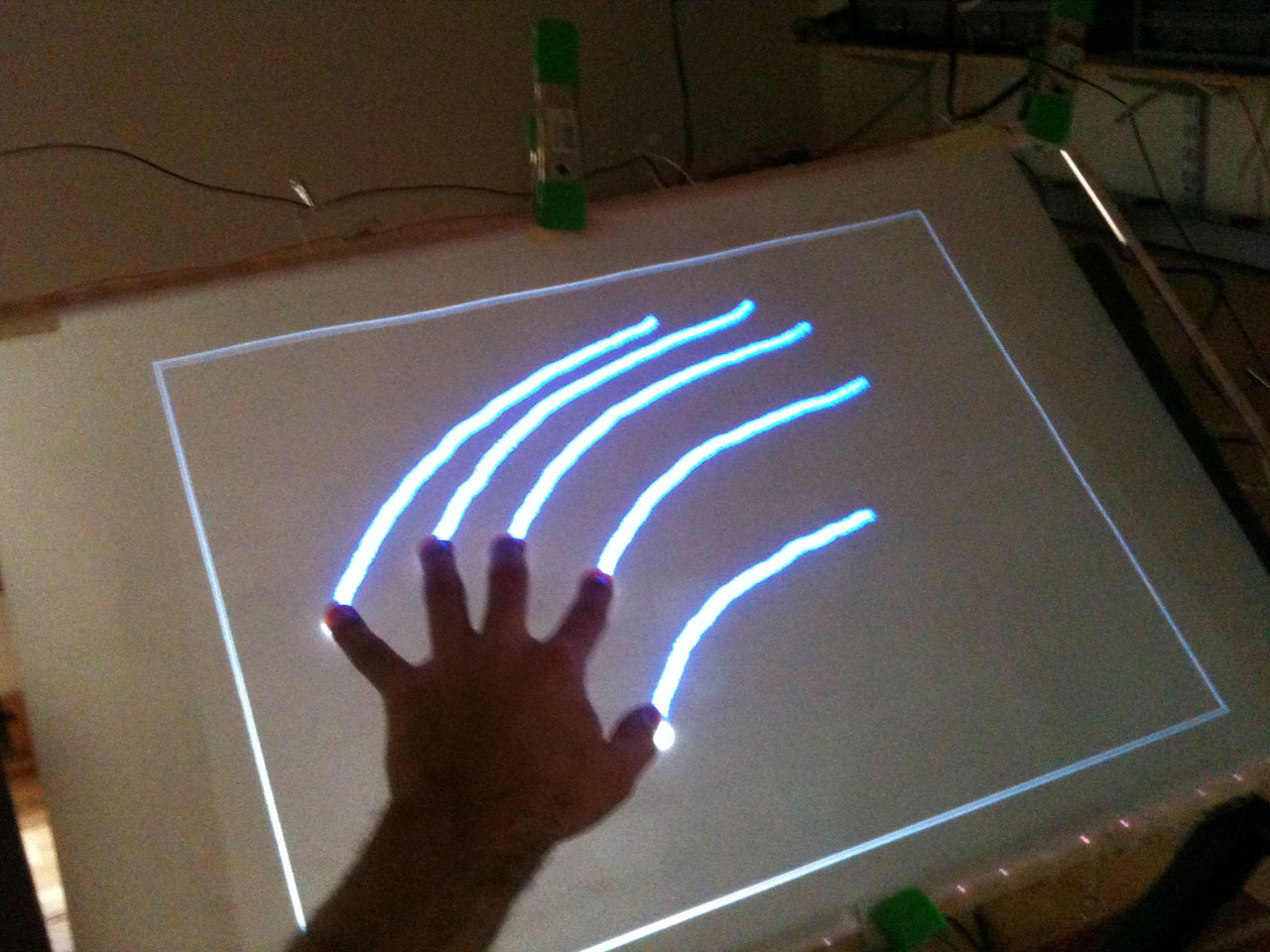

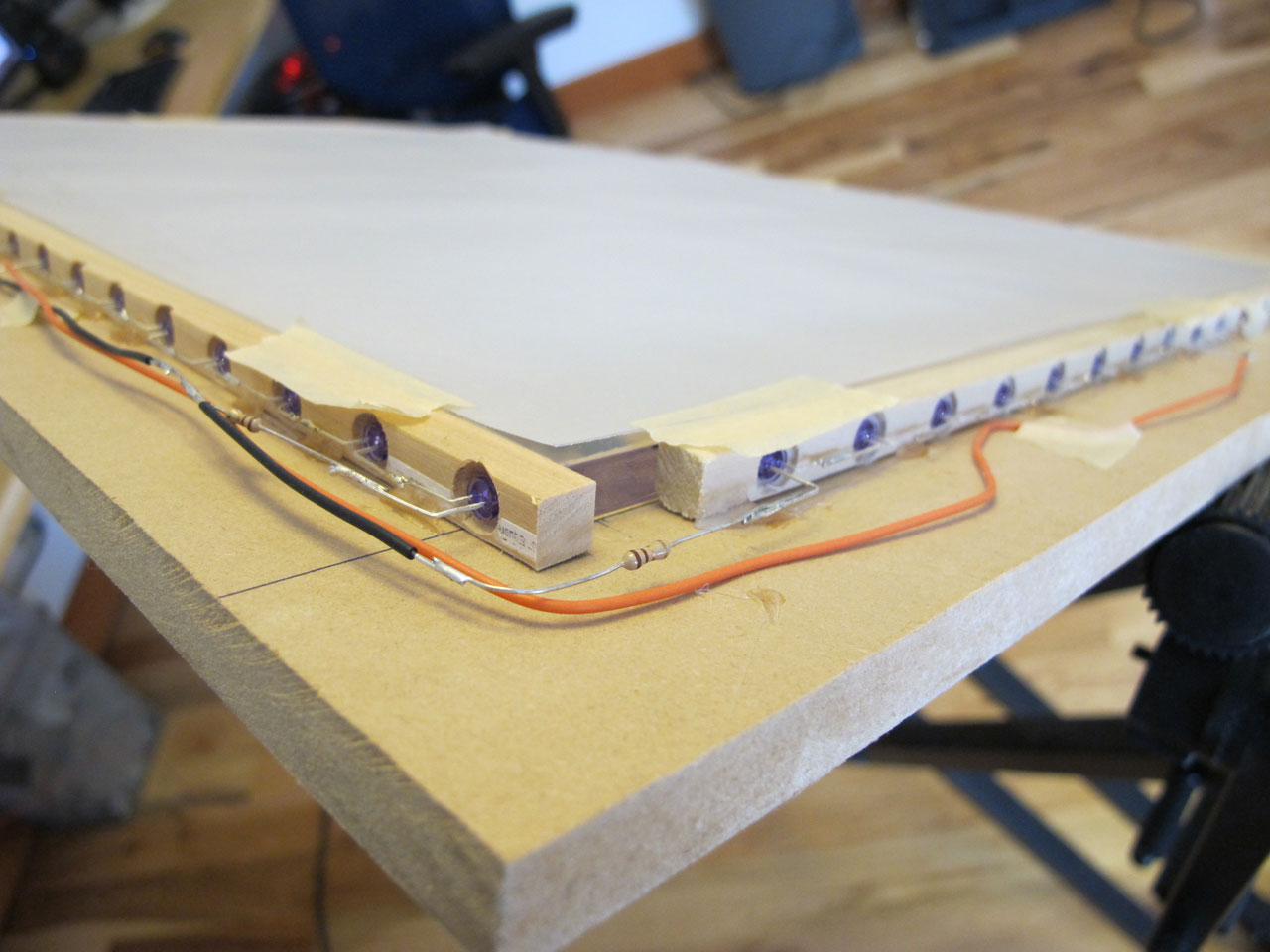

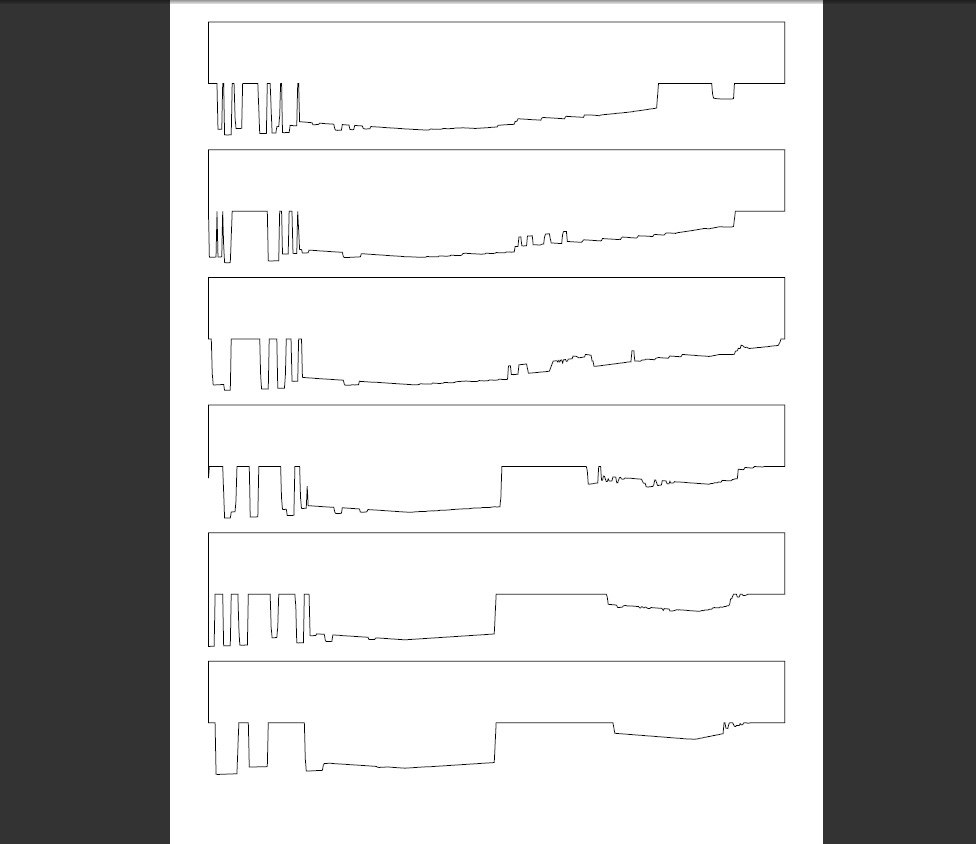

First I tested the concept at home with a couple of mirrors. Once I was convinced that my projection mapping software worked through a mirror, I set about building the adjustable mirror array. The array is a set of four small (about 5" square) mirror panels mounted on brackets I made that allow each mirror to swivel horizontally or vertically. The biggest drawback of this system is trying to drag the control points around when all the mouse movements are reversed.
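One way the reversed dragging could be softened is to negate the mouse movement on the mirrored axis before applying it to a control point. The sketch below is not what my software does; `ControlPoint`, `drag`, and the `flip_x`/`flip_y` flags are hypothetical names, and which axis needs flipping depends on how a given mirror panel is oriented.

```python
from dataclasses import dataclass


@dataclass
class ControlPoint:
    """A mapping control point in projector pixel coordinates."""
    x: float
    y: float


def drag(point: ControlPoint, dx: float, dy: float,
         flip_x: bool = False, flip_y: bool = False) -> ControlPoint:
    """Move a control point by a mouse delta, optionally un-mirroring it.

    flip_x / flip_y negate the delta on that axis so that the point
    appears to follow the mouse when viewed through a mirror.
    """
    sx = -1.0 if flip_x else 1.0
    sy = -1.0 if flip_y else 1.0
    return ControlPoint(point.x + sx * dx, point.y + sy * dy)


if __name__ == "__main__":
    p = ControlPoint(100.0, 50.0)
    # Assume this panel reverses left and right only.
    print(drag(p, dx=10.0, dy=5.0, flip_x=True))
```

Each mirror panel would need its own pair of flags, since every panel is aimed differently.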

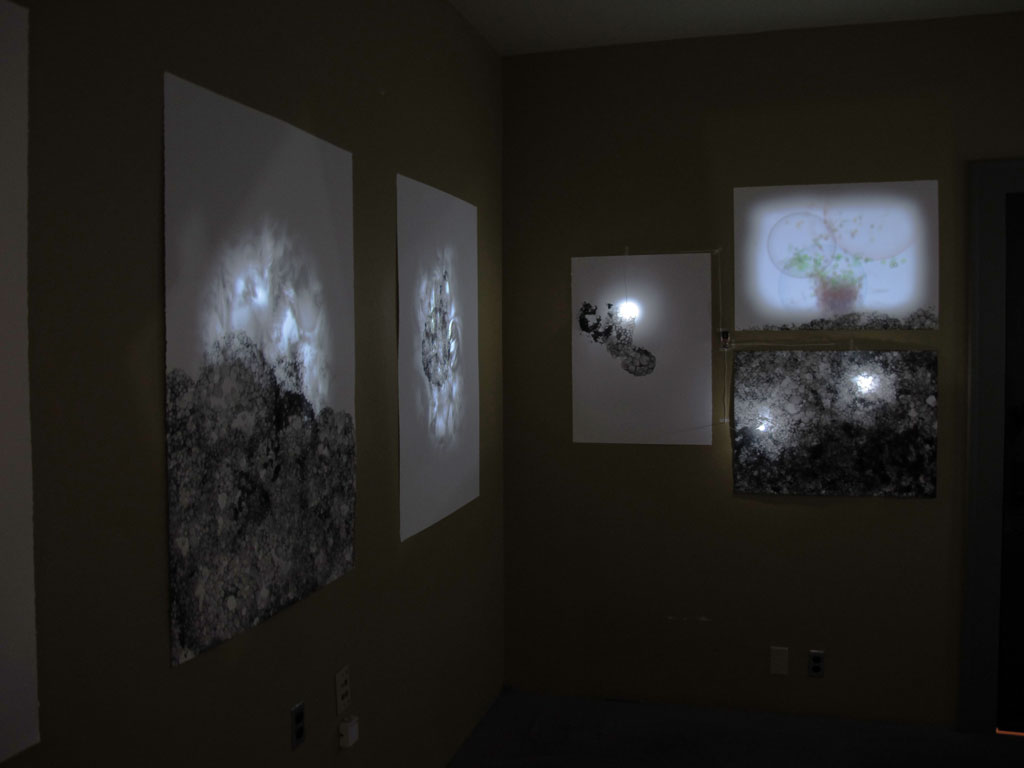

The final installation included four projected regions spanning three walls of the small room, with an almost 180-degree spread around the space. Being able to project at such strange and diverse angles created a very nice effect, since it removed the projector from the experience. In most cases, it's easy to trace a projection back from the wall to its source, but when the projector is tucked away in a lower corner of the room, the projected regions seem to float on the walls as if by magic.